How to fix the unknown partition error in Kafka

A look at the most common causes of Kafka's "unknown topic or partition" error along with practical steps and solutions to help you fix it.

Introduction

Kafka is a popular distributed data streaming solution used by many companies worldwide to process and transmit large volumes of data. However, Kafka's distributed nature can sometimes make it a complex system to work with, as it requires communication between its various components. Due to this, your Kafka deployments may encounter several issues that require confirmation of correct connections and communications.

One of the most common errors in Apache Kafka is related to broker issues, where producers or consumers are unable to connect or where the liveness check fails. Other common errors include issues with upscaling and rebalancing consumer groups, topics, and partitions. Incorrect offsets can also lead to issues with reading partitions.

The "unknown topic or partition" error in Kafka occurs when a producer or consumer is unable to find or connect with your selected topic or partition. This error can occur for various reasons, such as:

- The topic or partition does not exist or is not available

- The partition or topic exists on a specific broker that is not available

- A new partition has been added to the topic

- The partition has been reassigned due to a failed or new broker

- Incorrect definition of access control lists (ACLs) or access rights

In this article, you'll explore the error in Kafka, the possible reasons behind the error, and how to fix it.

What Causes the "Unknown Topic or Partition" Error?

The "unknown topic or partition" error is a common error when working with Apache Kafka. It typically occurs when a producer or consumer can't locate or connect to a specific topic or partition within the Kafka cluster. If not fixed promptly, it can potentially wreak havoc by causing unexpected downtime and data loss. The following are some of the most common causes of this error.

Topic or partition does not exist

One of the main reasons for this error is the actual absence of the selected topic or partition in the Kafka cluster. This can happen if there are typos in the topic name or partition number, or if the topic or partition was recently created and is still unavailable.

Failures in Kafka's automatic topic creation mechanism can also contribute to this error. Additionally, data loss from broker failures can lead to the topic or partition being unavailable.

Topic or partition not available on specific kafka broker

This error can also be caused by the unavailability of the partition on the specific broker being queried. Kafka's replication factor enables duplicate topics and their partitions to be available across multiple brokers on your Kafka cluster. However, depending on the configurations of the brokers and topics, it's possible that the desired partition may not be present on the brokers that are currently available.

Incorrect ACL or authorization

Kafka relies on access control lists (ACLs) to grant access to specific resources or groups of resources. If your Kafka service is using an incorrect ACL or doesn't have appropriate authorization to access the topic or partition you have selected, you may also get the "unknown topic or partition" error.

Repartitioning or partition reassignment

Lastly, repartitioning, where new partitions are created for an existing topic, and partition reassignment, where existing partitions are moved from one broker to another within your Kafka cluster, can also affect the availability and location of partitions, potentially leading to the "unknown topic or partition" error.

Solving the "Unknown Topic or Partition" error

Now that you know some of the most common causes of the "unknown topic or partition" error, the next sections provide practical steps and solutions to help you fix it.

Confirm topic or partition details

The first thing to do when you get an "unknown topic or partition" error is confirm the details of your topic or partition. Make sure you've got the right name for your topic or partition. Then, check if that topic or partition actually exists. You can use a Kafka command line tool to list all the created topics in your Kafka deployment by running the following command:

Once you have confirmed the topic name, you can dive deeper into the topic details to check the partitions and replicas by running the following command:

This will provide information on the partition count, replication factor, leader, and replicas for the specified topic. This way, you can confirm that the topic or partition exists and its details are correct, eliminating one possible cause of the error.

Verify authorization and access rights

Kafka uses ACLs to ensure that users and services are authorized to use resources within your Kafka cluster. If a service or user doesn't have access to a topic or partition, it might encounter the "unknown topic or partition" error, which makes it seem like the resource doesn't exist.

To fix this, check the ACLs connected to the topic and see if your service or user has the necessary access.

You can use the Kafka command line tool 'kafka-acls.sh' to list the ACLs configured for a topic or partition, allowing you to verify access permissions:

You can add any users that aren't listed in the ACL using your command line tool. For example, the following command adds a sample user as a producer to a selected topic:

Refresh topic metadata

After you create a topic in Kafka, it can sometimes take a while for this information to reach all the brokers. This delay is more common in larger Kafka clusters with many brokers. As a result, you might encounter the "unknown topic or partition" error when using producers or consumers. However, you can resolve this problem by manually triggering a metadata request to update the information and fix the issue.

Kafka services automatically refresh the metadata they work with, but the time between refreshes can be adjusted by modifying settings such as topic.metadata.refresh.interval.ms, max.poll.interval.ms, and metadata.max.age.ms for producers, consumers, and consumer groups. These settings allow you to fine-tune the frequency of metadata refreshes and ensure that your Kafka deployment stays up-to-date. This reduces the chances of encountering "unknown topic or partition" errors caused by outdated metadata.

Check your current reassignment strategy

The default partition reassignment process may not be very efficient for your Kafka cluster. For instance, when a consumer joins or leaves a consumer group, the Kafka brokers rebalance the partitions among consumers.

The default process, known as the eager protocol, involves dropping all existing partitions before assigning new ones, which can result in considerable downtime. If your application relies on partition state data, you'll need to reconfigure it with the new partitions, or you might encounter the "unknown topic or partition" error.

You can tweak the reassignment strategy using the partition.assignment.strategy property. You can also manually reassign your partitions between available brokers using the command line with the kafka-reassign-partitions tool.

Check for broker failures and data loss

Sometimes, the "unknown topic or partition" error in Kafka can be caused by issues such as broker failures or data loss within your Kafka cluster.

To troubleshoot and resolve this error, you can follow these steps:

- Check the health and status of your Kafka brokers: Make sure that all the required brokers are up and running without any errors.

- Monitor the Kafka logs for any indications of failures or issues: The logs can provide valuable insights into the health of your cluster and help identify any underlying issues.

- Validate the data integrity of your topics and partitions: Ensure that the data is intact and that there are no inconsistencies or instances of data loss. If there has been a broker failure or data loss, it might result in the unavailability of certain topics or partitions.

- Verify that the replication factor is properly set and the replicas are in a healthy state: This only applies if you have configured replication for your topics. Kafka replication helps ensure data availability and resilience to failures.

To check the replication factor, use the ‘--describe’ command covered in a previous section (“Confirm Topic or Partition Details”).

Conclusion

This article explored the common "unknown topic or partition" error in Kafka and discussed various reasons it might occur in your Kafka deployment. These reasons include the absence or unavailability of the topic or partition, specific Kafka broker problems, partition reassignment and repartitioning, and incorrect access rights. You also saw possible solutions for this error, including verifying topic and partition details, refreshing topic metadata, reviewing ACLs, and managing partition additions and updates to ensure proper synchronization.

Kafka is a complex system with interconnected components that require careful management to ensure smooth operation. While some errors have clear definitions and can be resolved easily, resolving others might require attempting multiple approaches or searching user forums.

For instance, if you encounter an "unknown topic or partition" error, it might take some time to work through the potential causes and find an effective solution. Fortunately, there are managed Kafka platforms (such as Confluent, Amazon MSK, or Aiven for Apache Kafka) that streamline your Kafka deployment and allow you to leverage efficient data pipelines without getting bogged down with the nitty-gritty of Kafka administration .

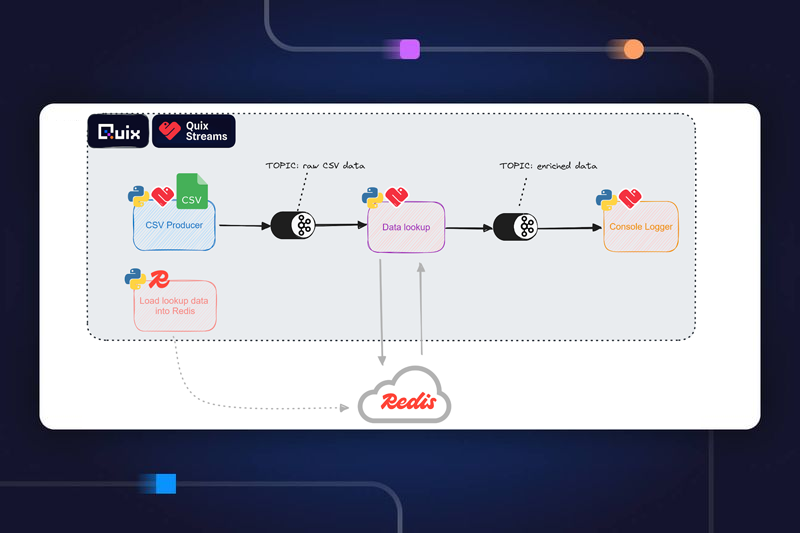

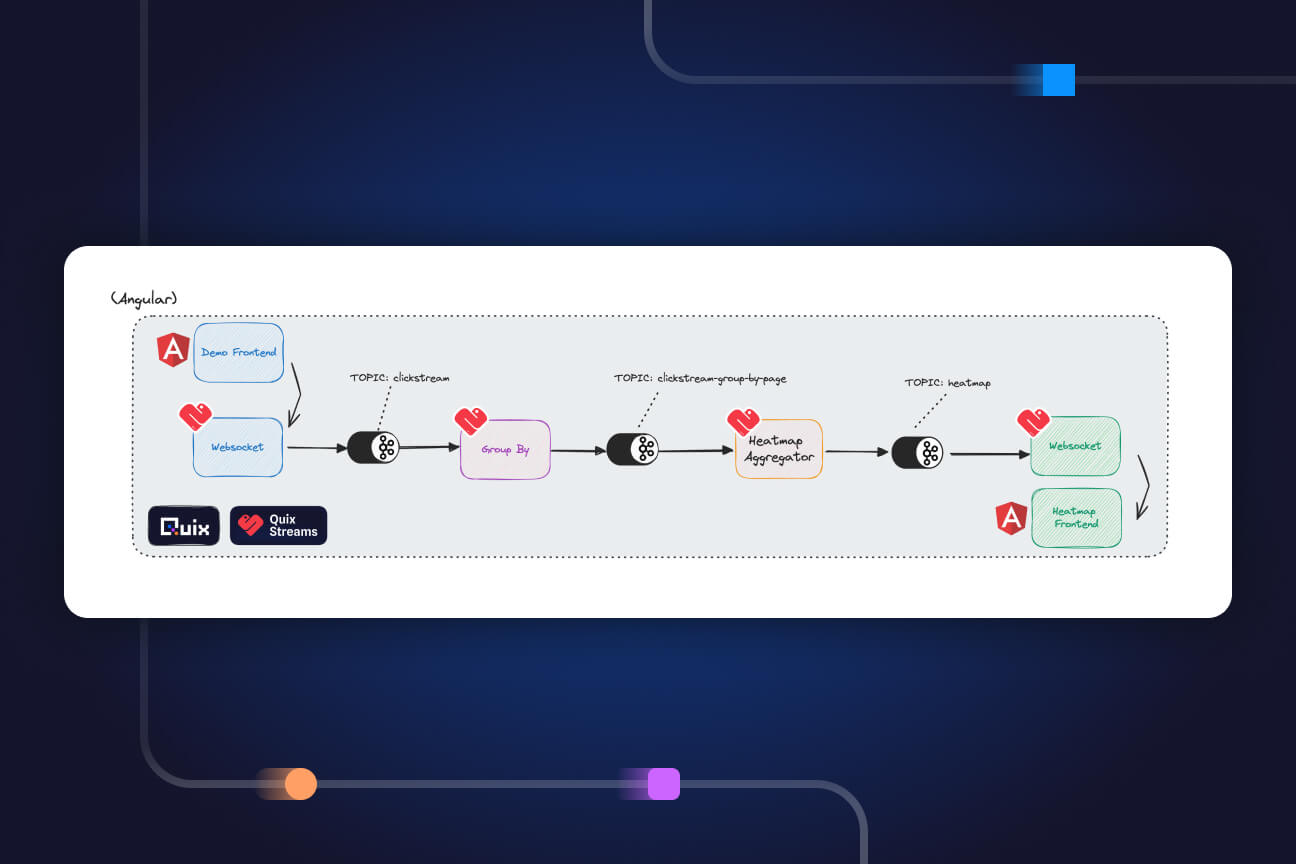

If you're looking for a self-orchestrating data platform to build mission-critical products powered by real-time data, consider Quix. With Quix, you can develop applications using serverless functions in days instead of months. It enables data teams to work on streaming data directly in Kafka using Python and Kubernetes. Quix supports C# or Python development on a reliable serverless platform powered by Kafka, Kubernetes and the Quix Streams client library.

Check out the repo

Our Python client library is open source, and brings DataFrames and the Python ecosystem to stream processing.

Interested in Quix Cloud?

Take a look around and explore the features of our platform.

Interested in Quix Cloud?

Take a look around and explore the features of our platform.

.svg)