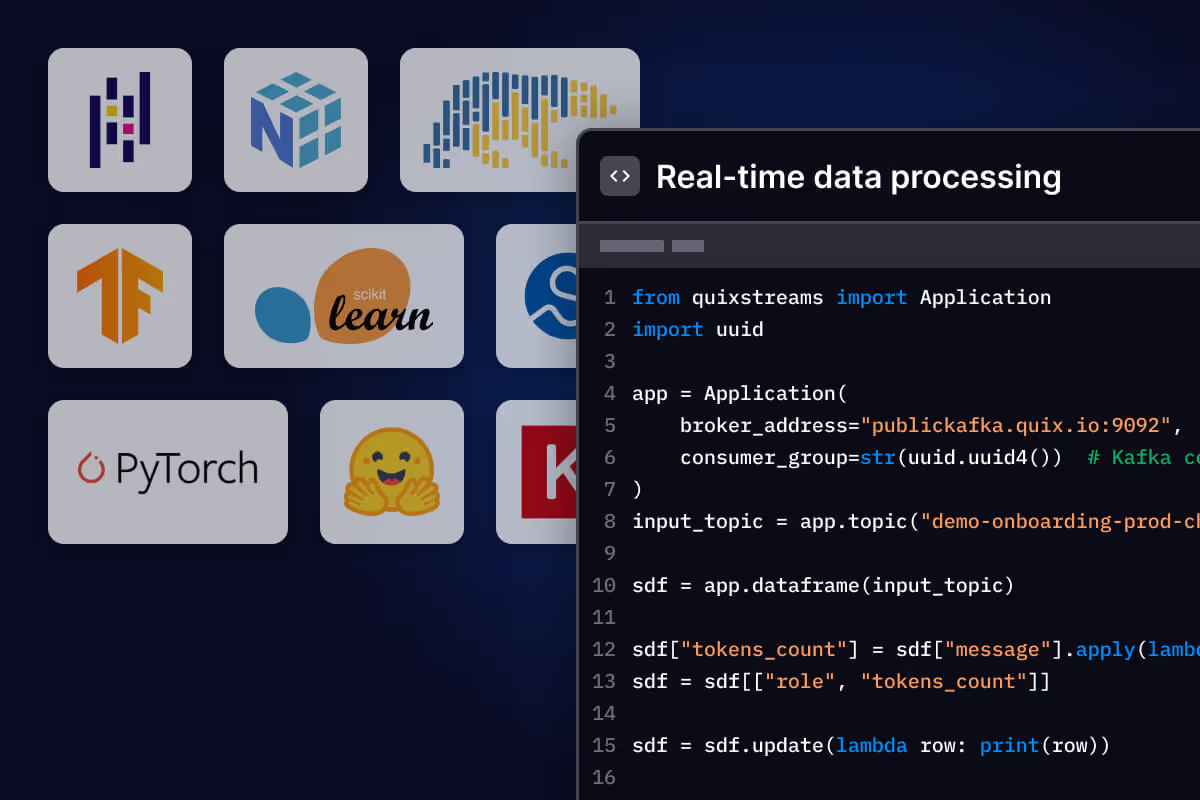

Develop in pure Python

No Java dependencies and no more hassle debugging Spark or Flink jobs. Use Python to create your own tasks and workflows. Import your favourite Python libraries for real-time processing and enjoy the ease of developing, testing and debugging your application in pure Python.

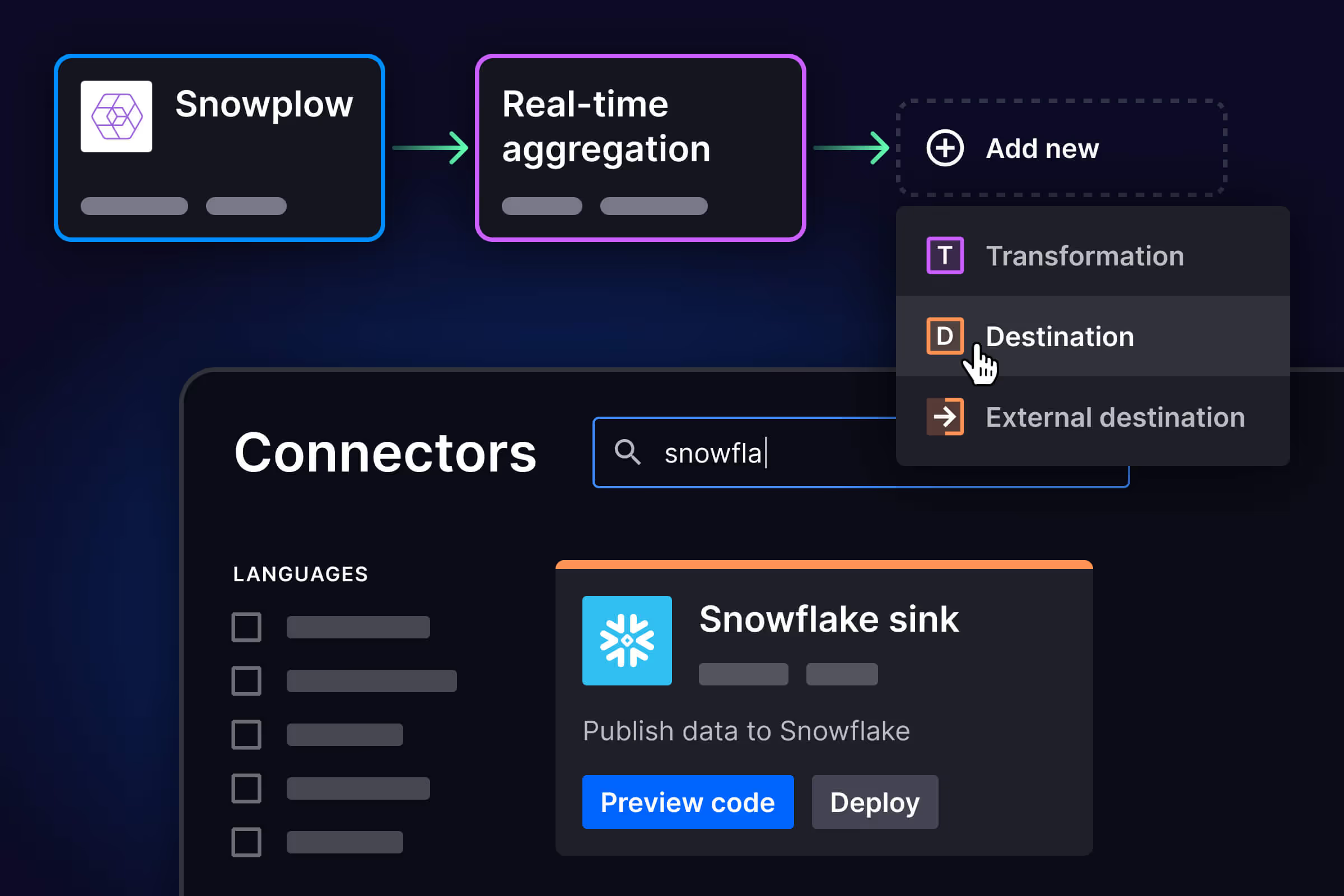

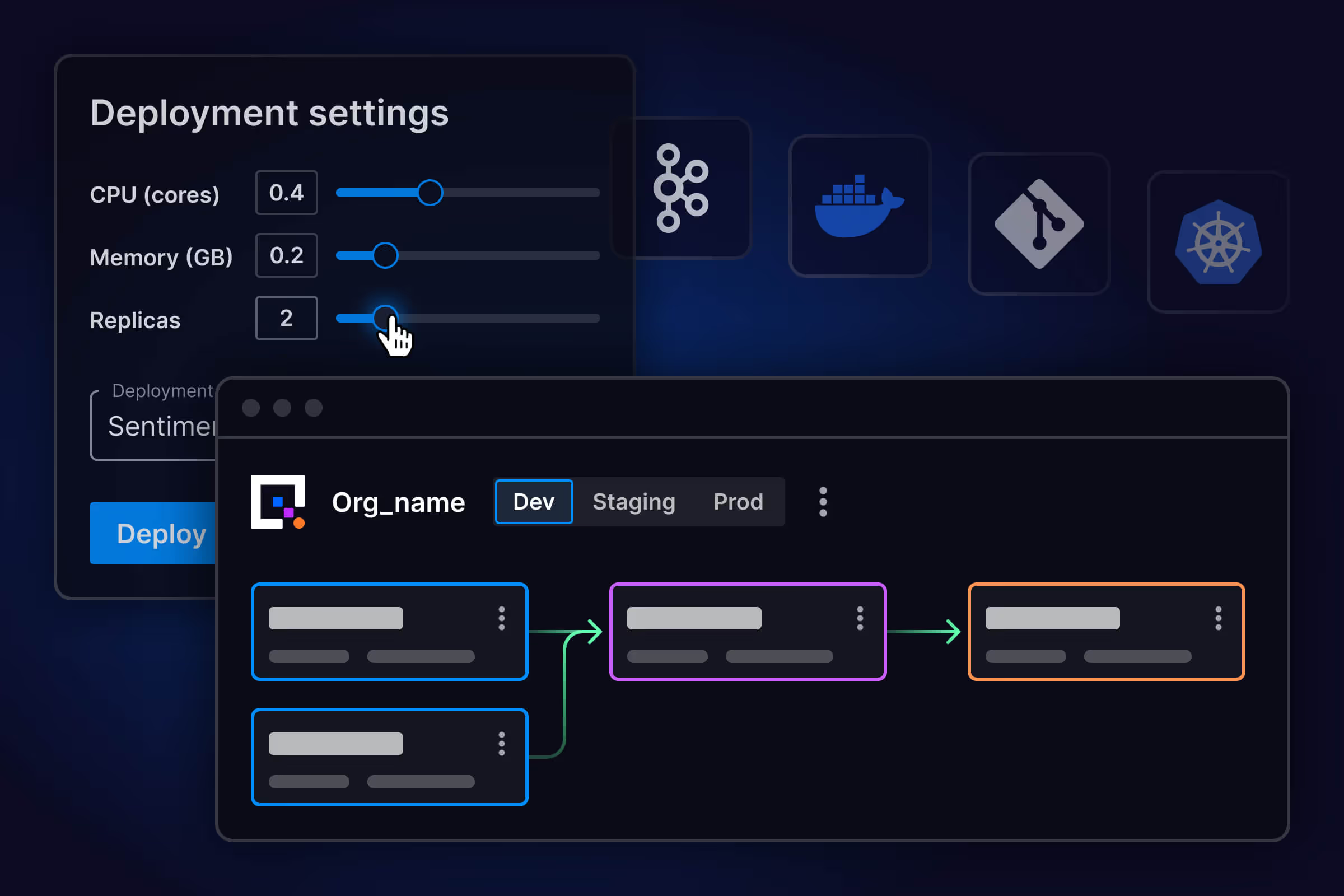

Quix is based on Docker, so it's easy to add system dependencies or libraries to support your application. Get started quickly with pre-configured transformation and connector templates. Integrate your data with our open-source plug-and-play connectors or build your own. This makes Quix easy to fit into your existing infrastructure on AWS, GCP, Microsoft Azure and many other third-party services.
